Caching Hudl’s news feed with ElastiCache for Redis

Every coach and athlete who logs into Hudl immediately lands on their news feed. Each feed is tailored to its user and consists of content from teams they are in as well as accounts they choose to follow. This page is the first impression for our users, so performance is critical. Our solution: ElastiCache for Redis.

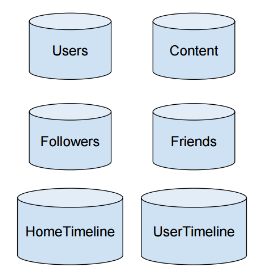

Before we talk about caching though, it’s important to understand at a high level the data model used in the feed. There are 6 main collections used by the feed:

To help illustrate this, let’s look at two users: Sally and Pete. Sally decides to follow Pete. We now say that Sally is a follower of Pete and that Pete is a friend of Sally. When Pete posts something, that post (aka content) gets added to his user timeline as well as the home timeline for Sally. When Sally logs into Hudl to view her feed, she sees her home timeline presented in reverse chronological order. If she then clicks on Pete, she views his user timeline and can view all the posts he’s created.
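The Sally and Pete example can be sketched in a few lines of Python. This is only an illustration of the follow and fan-out idea: the dictionaries and function names are hypothetical in-memory stand-ins, not our actual collections or service code.

```python
from collections import defaultdict

# Hypothetical in-memory stand-ins for the follower and timeline collections.
followers = defaultdict(set)        # user -> users who follow them
user_timeline = defaultdict(list)   # user -> their own posts, newest first
home_timeline = defaultdict(list)   # user -> posts from accounts they follow, newest first


def follow(follower, friend):
    """Record that `follower` now follows `friend`."""
    followers[friend].add(follower)


def post(author, post_id):
    """Add a post to the author's user timeline and to each follower's home timeline."""
    user_timeline[author].insert(0, post_id)
    for follower in followers[author]:
        home_timeline[follower].insert(0, post_id)


follow("sally", "pete")   # Sally is a follower of Pete; Pete is a friend of Sally
post("pete", "post-1")
post("pete", "post-2")

sally_feed = home_timeline["sally"]   # what Sally sees when she logs in
pete_posts = user_timeline["pete"]    # what Sally sees when she clicks on Pete
```

Inserting at the front of each list keeps both timelines in reverse chronological order without sorting on read.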

Let’s take a look now at what gets loaded every time a user hits their feed. We first grab a batch of post IDs from their home timeline, fetch those posts, and load all users referenced by those posts. Since the feed was created back in April 2015, the DB has grown rapidly and the total size is up to 120 GB. We currently have 18 million follower relationships and 30 million pieces of content. So where does caching come into play?

Redis

In the world of caching, there are primarily two options: Memcached and Redis. For the longest time at Hudl, the default option was Memcached. It’s a proven technology and had previously served the vast majority of needs across our services. However, with the introduction of the news feed, we decided to dig a little deeper into the data structures Redis had to offer, and we were really excited by what we found:

Lists

This alone would’ve been reason enough to use Redis. Timelines are naturally stored as lists, so being able to represent them that way in cache is amazing. As posts are added to timelines, we simply do an LPUSH (add to the front) followed by an LTRIM (used to cap the list at a max size). The best part: we don’t have to invalidate the cache as posts are added because it’s always being kept in sync with the DB.
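Here is a minimal Python sketch of the LPUSH followed by LTRIM pattern. To keep it runnable without a server, it uses a tiny in-memory stand-in (`FakeRedisLists`) that mimics the semantics of those commands; the key format and the cap of 3 are assumptions made for the demo, not our production values.

```python
class FakeRedisLists:
    """Tiny in-memory stand-in mimicking Redis LPUSH, LTRIM and LRANGE.

    Only non-negative indexes and a stop of -1 are handled, which is
    all this sketch needs.
    """

    def __init__(self):
        self._lists = {}

    def lpush(self, key, *values):
        lst = self._lists.setdefault(key, [])
        for value in values:
            lst.insert(0, value)  # each value goes to the front
        return len(lst)

    def ltrim(self, key, start, stop):
        lst = self._lists.get(key, [])
        # Redis ranges are inclusive; -1 means "through the last element".
        end = len(lst) if stop == -1 else stop + 1
        self._lists[key] = lst[start:end]
        return True

    def lrange(self, key, start, stop):
        lst = self._lists.get(key, [])
        end = len(lst) if stop == -1 else stop + 1
        return lst[start:end]


MAX_TIMELINE_LENGTH = 3  # hypothetical cap, kept small for the demo


def add_to_timeline(client, user_id, post_id):
    """Push the newest post to the front, then cap the list length."""
    key = f"timeline:home:{user_id}"  # hypothetical key format
    client.lpush(key, post_id)
    client.ltrim(key, 0, MAX_TIMELINE_LENGTH - 1)


client = FakeRedisLists()
for post_id in ["p1", "p2", "p3", "p4"]:
    add_to_timeline(client, "sally", post_id)

latest = client.lrange("timeline:home:sally", 0, -1)
```

After four pushes with a cap of three, the oldest post (`p1`) has been trimmed off the end and the list is still newest first. The redis-py client exposes `lpush`, `ltrim` and `lrange` under the same names, so `add_to_timeline` should carry over to a real connection with little change.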

Hashes

Displaying the number of followers and friends for a given user is a critical component for any feed. By storing these as fields on a hash for each user, we can quickly call HINCRBY to keep the values in sync with the DB without the need to invalidate the cache every time a follow or unfollow happens.
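The counter pattern might look like the following Python sketch. `FakeRedisHashes` is an in-memory stand-in for HINCRBY and HGETALL so the example runs on its own, and the key and field names are hypothetical.

```python
class FakeRedisHashes:
    """Tiny in-memory stand-in mimicking Redis HINCRBY and HGETALL.

    A real Redis server returns field values as strings; this stand-in
    keeps them as ints for simplicity.
    """

    def __init__(self):
        self._hashes = {}

    def hincrby(self, key, field, amount=1):
        fields = self._hashes.setdefault(key, {})
        fields[field] = fields.get(field, 0) + amount
        return fields[field]

    def hgetall(self, key):
        return dict(self._hashes.get(key, {}))


def counts_key(user_id):
    return f"user:{user_id}:counts"  # hypothetical key format


def record_follow(client, follower_id, friend_id):
    """The follower gains a friend; the followed user gains a follower."""
    client.hincrby(counts_key(follower_id), "friends", 1)
    client.hincrby(counts_key(friend_id), "followers", 1)


def record_unfollow(client, follower_id, friend_id):
    """Reverse of record_follow: decrement both counters."""
    client.hincrby(counts_key(follower_id), "friends", -1)
    client.hincrby(counts_key(friend_id), "followers", -1)


client = FakeRedisHashes()
record_follow(client, "sally", "pete")
record_follow(client, "alex", "pete")
record_unfollow(client, "alex", "pete")

pete_counts = client.hgetall(counts_key("pete"))
sally_counts = client.hgetall(counts_key("sally"))
```

Because each follow or unfollow adjusts the cached counters in place, nothing has to be evicted and recomputed from the DB.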

Sets

We love to use RabbitMQ to retry failed operations, but a retried operation can attempt to insert a post that was already delivered. Sets are the perfect way for us to guarantee we don’t accidentally insert the same post on a user’s timeline more than once without having an extra DB call. We use the post ID as the cache key, each user ID as the member, and then call SISMEMBER and SADD.
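A rough Python sketch of that duplicate check follows. `FakeRedisSets` is an in-memory stand-in for SISMEMBER and SADD, and the key format and `deliver_once` helper are hypothetical names used only for illustration.

```python
class FakeRedisSets:
    """Tiny in-memory stand-in mimicking Redis SISMEMBER and SADD."""

    def __init__(self):
        self._sets = {}

    def sismember(self, key, member):
        return member in self._sets.get(key, set())

    def sadd(self, key, *members):
        existing = self._sets.setdefault(key, set())
        added = 0
        for member in members:
            if member not in existing:
                existing.add(member)
                added += 1
        return added  # like Redis, report how many members were new


def deliver_once(client, post_id, user_id, timeline):
    """Insert post_id on the timeline unless it was already delivered to user_id."""
    key = f"post:{post_id}:delivered"  # hypothetical key: one set per post
    if client.sismember(key, user_id):
        return False  # a retry reached us after a successful delivery
    client.sadd(key, user_id)
    timeline.insert(0, post_id)
    return True


client = FakeRedisSets()
sally_timeline = []

first = deliver_once(client, "post-1", "sally", sally_timeline)
retry = deliver_once(client, "post-1", "sally", sally_timeline)  # simulated retry
```

The retried delivery is skipped, so the post appears once. Since SADD itself reports whether the member was newly added, the check and the add could likely be collapsed into a single call where that is preferable.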

ElastiCache

Once we decided on Redis, the next question was how to get a server spun up and configured so we could start testing with it. We love AWS and had heard about Amazon ElastiCache for Redis as an option so we decided to give it a try. Within minutes, we had our first test node spun up running Redis and were connecting to it through the StackExchange.Redis C# driver.

With ElastiCache, we were easily able to configure our Redis deployment, node size, and security groups, and use Amazon CloudWatch to monitor all key metrics. We were able to create separate test and production clusters, all without waiting for an infrastructure engineer to set up and configure the servers manually. Here’s what we used for our production cluster:

- Node type: cache.r3.4xlarge (118 GB)

- Replication Enabled

- Multi-AZ

- 2 Read Replicas

- Launched in VPC

The final step in completing our deployment was configuring alerts through Stackdriver. They seamlessly support integrating with the ElastiCache service and within a few minutes, we had our alerts configured. We were most interested in three key metrics:

- Current Connections: if these drop to 0, our web servers are no longer able to access the cache and require immediate attention.

- Average Bytes Used for Cache Percentage: if this reaches 95% or higher, it’s a good signal to us that we may need to consider moving to a larger node type or lowering our expiration times.

- Swap Usage: if this gets to 1 GB or higher, the Redis server is in a bad state and requires immediate attention.
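To make the three thresholds concrete, here is a small Python sketch of the alerting rules. It is not how Stackdriver evaluates alerts; the function, parameter names, and message strings are hypothetical, and treating 1 GB as 1024³ bytes is an assumption.

```python
CACHE_USED_PERCENT_LIMIT = 95.0
SWAP_LIMIT_BYTES = 1 * 1024 ** 3  # 1 GB, assumed to mean 1024^3 bytes


def evaluate_alerts(current_connections, cache_used_percent, swap_usage_bytes):
    """Return the list of alert messages triggered by one metrics sample."""
    alerts = []
    if current_connections == 0:
        alerts.append("connections: web servers cannot reach the cache")
    if cache_used_percent >= CACHE_USED_PERCENT_LIMIT:
        alerts.append("memory: consider a larger node type or shorter expirations")
    if swap_usage_bytes >= SWAP_LIMIT_BYTES:
        alerts.append("swap: Redis server is in a bad state")
    return alerts


healthy = evaluate_alerts(
    current_connections=120, cache_used_percent=40.0, swap_usage_bytes=0
)
unhealthy = evaluate_alerts(
    current_connections=0, cache_used_percent=96.5, swap_usage_bytes=2 * 1024 ** 3
)
```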

Results

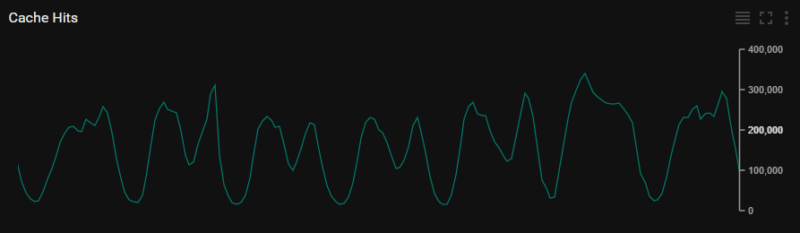

The feed was launched back in April 2015 and since then we couldn’t be happier with its performance. Hudl’s traffic is highly seasonal and football season is our prime time. Starting around August, coaches and athletes from all over the country get back into football mode and log into Hudl daily. During the week of September 5th to 11th, there were 1.2 million unique users accessing their feeds. The feed service averaged 300 requests per second, with a peak of 800. Here are some quick stats from ElastiCache during that same week:

- Total Cached Items: 21 million

- Cache hits: 175K/min (average), 350K/min (peak)

- Network in: 43 MB/min (average), 101 MB/min (peak)

- Network out: 600 MB/min (average), 1.25 GB/min (peak)

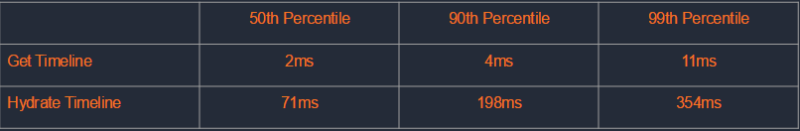

Let’s take a closer look at two calls in the feed service: getting the timeline and hydrating the timeline. The first call covers only the operation of fetching the timeline list from Redis, with no other dependencies. The second call takes the post IDs in the timeline and loads all referenced users and posts. It’s important to note that this includes time spent loading records from the database if they are not cached and then caching them. This is the primary call used when loading the feed on the web as well as on our iOS and Android apps.
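The hydrate step can be sketched as a cache-aside lookup. In this Python illustration, plain dictionaries stand in for both the cache and the database, and the record shapes, key prefixes, and function names are all assumptions rather than our actual service code.

```python
def get_many(cache, db, prefix, ids):
    """Cache-aside lookup: read from cache, load misses from the DB, then cache them."""
    found = {}
    for item_id in ids:
        key = f"{prefix}:{item_id}"  # hypothetical key format
        if key not in cache:
            cache[key] = db[item_id]  # cache miss: load the record and store it
        found[item_id] = cache[key]
    return found


def hydrate_timeline(post_ids, cache, posts_db, users_db):
    """Turn a list of post IDs into posts paired with their authors, in order."""
    posts = get_many(cache, posts_db, "post", post_ids)
    author_ids = sorted({post["author_id"] for post in posts.values()})
    users = get_many(cache, users_db, "user", author_ids)
    return [
        {"post": posts[post_id], "author": users[posts[post_id]["author_id"]]}
        for post_id in post_ids
    ]


# Hypothetical sample data standing in for the database.
posts_db = {
    "p1": {"author_id": "pete", "text": "Game highlights"},
    "p2": {"author_id": "pete", "text": "Practice recap"},
}
users_db = {"pete": {"name": "Pete"}}
cache = {}

feed = hydrate_timeline(["p2", "p1"], cache, posts_db, users_db)
cached_keys = sorted(cache)
```

On the first call every record is a miss and gets loaded from the database; a second call with the same IDs would be served entirely from the cache, which is why the timing for this call includes database time only for uncached records.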

Based on the success of the feed, ElastiCache for Redis is quickly becoming our default option for caching. In the last year, five other key services at Hudl have made the switch from Memcached. It’s easy to set up, offers blazing fast performance, and gives users all the benefits that Redis has to offer. If you haven’t tried it out yet, I would strongly recommend giving it a shot and letting us know how it works out for you.